In 2020, a project from Israel’s Neuro-Biomorphic Engineering Lab and ALYN hospital caught the attention of technology giants Accenture and Intel. The research project aims to create a wheelchair-mounted robotic arm with adaptive controls. The two industry leaders announced their support for the project, providing funding and algorithms developed for their neuromorphic computing hardware. What are they trying to accomplish? Greater independence for wheelchair users at a significantly reduced cost.

Neuromorphic computing is one of the many areas of research that can fall under the ‘technology for good’ category. It is a promising frontier for technologists who want to deliver adaptive and energy-efficient AI. Furthermore, the technology can help create smart products that are easier to interact with. How exactly will this help change the face of AI moving forward?

Understanding neuromorphic computing

Neuromorphic computing uses the characteristics of biological nervous systems as a model to develop artificial neural networks. It aims to emulate the brain’s neural structure, which can handle uncertainty, ambiguity, and contradiction. This is a complicated undertaking, and a wide range of disciplines collaborate to drive it, from materials science, device physics, and electrical engineering to computer science and neuroscience.
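To make the idea of modeling hardware on biological neurons a little more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook simplification. The article does not say which neuron model any particular chip uses, so the function name `simulate_lif` and the `threshold`, `leak`, and `reset` parameters are illustrative assumptions, not any vendor's API.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    All names and default values are hypothetical, chosen for illustration.
    Returns a list of 0/1 spike flags, one per input sample.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Keep a fraction of the stored potential (the "leak"),
        # then integrate the new input on top of it.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # the neuron fires a spike...
            potential = reset  # ...and its potential resets
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # A weak constant input: the potential builds up over several
    # steps before the neuron emits a spike.
    print(simulate_lif([0.3] * 10))
```

The neuron stays silent until enough input has accumulated and produces no spikes at all when the input is zero. That event-driven behavior is one reason this style of computation is often associated with low power use.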

Here are a few advantages of neuromorphic chipsets compared with current AI systems:

- Low-latency system: Neuromorphic chipsets are well suited to continuous data streaming. They do not require data to be transferred to an external system for analysis.

- Adaptive computing: Their architecture allows devices to adapt to changing circumstances.

- Quick learning capability: Neuromorphic systems have been able to learn quickly from little data, whereas conventional AI typically needs far more training data.

Frontrunners bridging the gap between neuromorphic computing and leading-edge AI

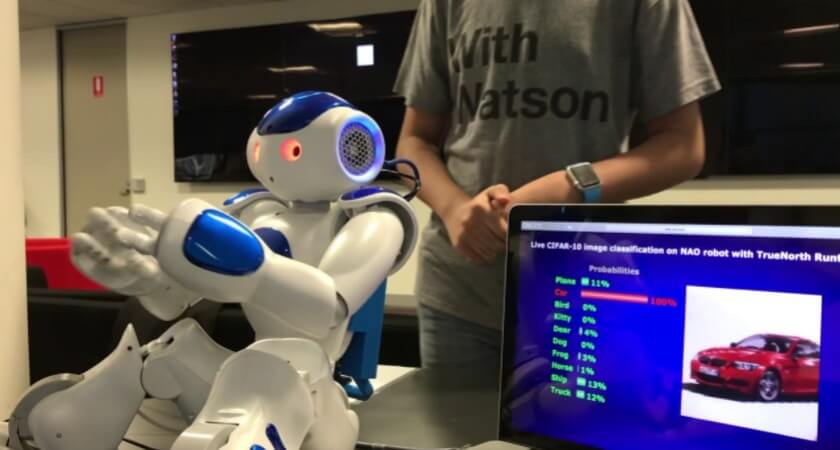

IBM TrueNorth: A chip that can mimic the human brain

Source: IBM Research

The human brain contains over 400,000 km of nerve fiber, which lets it perform roughly a quintillion calculations per second, or one exaflop in computing terms. By comparison, the IBM-designed Summit, the world’s fastest supercomputer when it launched in 2018, is capable of 200 quadrillion calculations per second, or 200 petaflops. That is 600,000 times faster than the CPU in an iPhone X.
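A quick back-of-the-envelope check puts these figures side by side. The sketch below uses only the approximate numbers quoted in the paragraph above. The variable names are illustrative, and the implied iPhone X throughput is derived from the quoted ratio, not from a measured benchmark.

```python
# Figures quoted in the paragraph above (all approximate).
BRAIN_OPS_PER_SEC = 1e18      # one quintillion = 1 exaflop
SUMMIT_OPS_PER_SEC = 200e15   # 200 quadrillion = 200 petaflops
SUMMIT_VS_IPHONE_X = 600_000  # quoted speed-up over the iPhone X CPU

# How many Summit-class machines would match the brain estimate?
brain_vs_summit = BRAIN_OPS_PER_SEC / SUMMIT_OPS_PER_SEC

# Throughput the quoted ratio implies for the iPhone X CPU.
implied_iphone_ops = SUMMIT_OPS_PER_SEC / SUMMIT_VS_IPHONE_X

print(f"Brain estimate vs Summit: {brain_vs_summit:.0f}x")
print(f"Implied iPhone X throughput: {implied_iphone_ops / 1e9:.0f} gigaflops")
```

On these numbers, the brain estimate is about five times Summit’s throughput, and the quoted ratio implies roughly 333 gigaflops for the phone’s CPU.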

Computers are far superior to humans at specific, specialized tasks. However, they do not come close to humans when it comes to cognitive faculties like perception, imagination, and consciousness. IBM aims to narrow that gap by mimicking the neurobiological architecture of the brain. The TrueNorth chip is the product of 16 years of ongoing research by scientists at IBM in California.

Here’s a timeline to put things into perspective. By 2011, IBM had built two prototype chips called Golden Gate and San Francisco. Each chip contained 256 neurons, about the size of a worm’s nervous system. Despite the limited number of neurons, the chips could handle simple cognitive exercises: they could play Pong and recognize handwritten digits. By 2013, the team had shrunk the components of the Golden Gate chip 15-fold and reduced its power consumption 100-fold. This became the basis for the TrueNorth chip, whose cores can operate independently and in parallel with one another.

Today, TrueNorth is the second-largest chip IBM has ever produced, yet it consumes just 73 milliwatts, about a thousand times less than a typical CPU. It has proven highly proficient at machine learning applications such as image recognition.

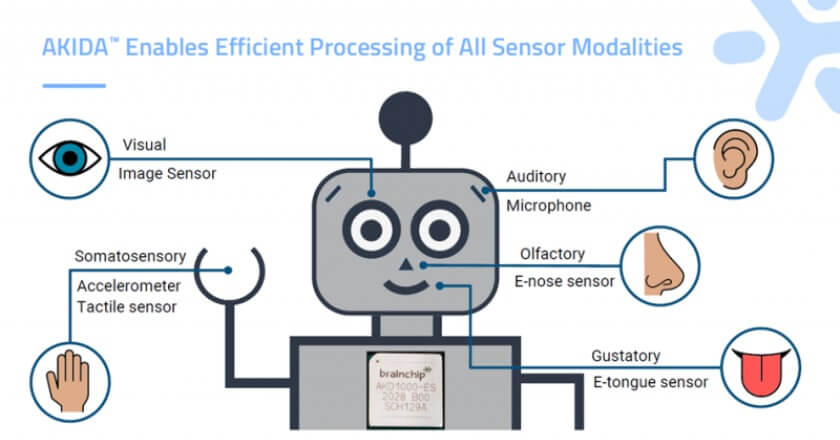

BrainChip Akida: Technological capability that can improve the human condition

Source: Edge AI Vision

During this year’s AI Hardware: Executive Outlook Summit, held in early December, BrainChip’s CTO Peter van der Made won the AI Hardware 2021 Innovator Award. By developing the Akida neural processor and commercializing the world’s first neuromorphic processing technology, BrainChip is revolutionizing the capabilities of AI at the edge.

BrainChip’s Akida processing architecture is both scalable and flexible enough to address the requirements of edge devices. It can provide a complete ultra-low-power AI edge network for vision, audio, olfactory, and smart transducer applications. Furthermore, Akida acts as a quick-response system that can help reduce the large carbon footprint of data centers.

In November this year, BrainChip announced a partnership with Japanese semiconductor company MegaChips. Moving forward, MegaChips will use the Akida platform to develop AI-based solutions for edge computing. BrainChip’s technology can allow developers to create automotive sensors and cybersecurity applications for smart devices. It also enables highly advanced IoT tech across a diverse range of industries.

Intel Neuromorphic Computing Research: Beating traditional processors by a factor of 2,000

Source: Intel

In September this year, Intel released Loihi 2, the newest iteration of its neuromorphic hardware. This next-generation neuromorphic processor is designed to behave a lot like the brain’s neurons. Accenture, Hitachi, GE, and over 75 other organizations (including several Fortune 500 companies) have come together to pioneer the basic tools and algorithms required to make Intel’s neuromorphic technology useful. This collaboration between major technology companies is part of the Intel Neuromorphic Research Community (INRC).

This neuromorphic computing collaboration comes at a time when radical research is most needed for the advancement of microchip technology. Through INRC, leading researchers from academia, industry, and government tackle the challenges facing the field of neuromorphic computing. Eventually, the idea is to leverage the insights that come from this customer-centric research to inform the designs of future Intel processors and AI systems.

According to Mike Davies, Director of Intel’s Neuromorphic Computing Lab, “Neuromorphic chipsets come with several advantages that have a rather promising application frontier. From visual sensing, robotics in factories and human-computer interfaces to a distributed intelligence across a variety of industries – the possibilities are vast.”

Towards a more powerful, more human AI

AI has already transformed the way we work and live. From biometric systems and virtual assistants to large-scale data security and fraud detection, AI has become an integral part of our daily lives. With neuromorphic computing, AI applications in more complex fields, such as remote sensing and intelligence analysis, will improve.

Moreover, the global neuromorphic computing market could reach USD 8.58 Bn by 2030, growing at a CAGR of 79.0%. Neuromorphic AI is a radical path toward the exponential growth of machine intelligence and human augmentation, and it is also helping drive conversations around artificial general intelligence (AGI) and the possibilities that lie ahead.

Enterprises are increasingly relying on extensive AI applications to address a variety of business needs. What will your next move be? Is your company prepared to thrive in a world of technological disruption and digital dominance? With Netscribes’ technology and innovation research solutions, you can stay prepared for the next wave of technology requirements and the business landscape. To learn more, get in touch with us.